The Paul Scherrer Institut (PSI) maintains two 100 Gbit/s direct links to the Swiss National Supercomputing Centre (CSCS). Over these links, data from the central data catalogue are regularly transmitted from Villigen to Lugano. The data stem from the Photon Science facilities SLS (synchrotron) and SwissFEL (X-ray laser) and are organized into so-called datasets, each identified by a globally unique persistent identifier (PID). The data are tagged with metadata and archived in a Petabyte Archive System, which is responsible for packaging, archiving and retrieving the datasets within a tape-based long-term storage system located at CSCS. These transmissions currently add up to around 4 petabytes per year and are expected to reach 50-70 petabytes per year in the future. At the upper estimate of 70 petabytes per year, this amounts to about 17.8 Gbit/s of sustained network transfer, or roughly 71 Gbit/s if transfers run only one fourth of the time, over the course of a year. Without control over which paths such large data volumes traverse, even a high-speed 100 Gbit/s link can easily become congested.
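The bandwidth figures above follow from simple arithmetic, which the following minimal sketch reproduces. The function name `sustained_gbps` and the `duty_cycle` parameter are illustrative and not part of any PSI or CSCS tooling; decimal units (1 PB = 10^15 bytes) and a 365-day year are assumed.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s, leap years ignored


def sustained_gbps(petabytes_per_year: float, duty_cycle: float = 1.0) -> float:
    """Average rate in Gbit/s needed to move a yearly data volume.

    duty_cycle is the fraction of the year during which transfers run
    (1.0 = continuously, 0.25 = one fourth of the time).
    Assumes decimal units: 1 PB = 1e15 bytes, 1 Gbit = 1e9 bits.
    """
    if not 0 < duty_cycle <= 1:
        raise ValueError("duty_cycle must be in (0, 1]")
    bits_per_year = petabytes_per_year * 1e15 * 8
    return bits_per_year / (SECONDS_PER_YEAR * duty_cycle) / 1e9


if __name__ == "__main__":
    # Current volume and the two ends of the expected future range.
    for volume_pb in (4, 50, 70):
        continuous = sustained_gbps(volume_pb)
        quarter = sustained_gbps(volume_pb, duty_cycle=0.25)
        print(f"{volume_pb:>2} PB/year: {continuous:5.1f} Gbit/s continuous, "
              f"{quarter:5.1f} Gbit/s at a 25% duty cycle")
```

For 70 petabytes per year, the continuous rate comes out at about 17.8 Gbit/s, which is the figure quoted in the text. Running transfers only a quarter of the time multiplies the required rate by four.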

The technology in place is based on a commercial high-performance archival system by IBM. The engineers at PSI maintain a buffer in case data transmission stalls. NFS and AFS are employed to synchronize data between PSI and CSCS.

The current test project uses a 10 Gbit/s firewalled connection between PSI and CSCS, with the goal of reaching 5-7 Gbit/s with an improved sending host. After both PSI and CSCS were connected to the SCION network as part of the SCI-ED project, work on an improved file transfer setup began in fall 2020, and the first successful tests were run before the end of that year. The bottleneck of the current solution is the loading process (around 5 Gbit/s), which could be further optimized with faster disks. Considering only the network transfer with SCION and Hercules, the speed is 6.7 Gbit/s.

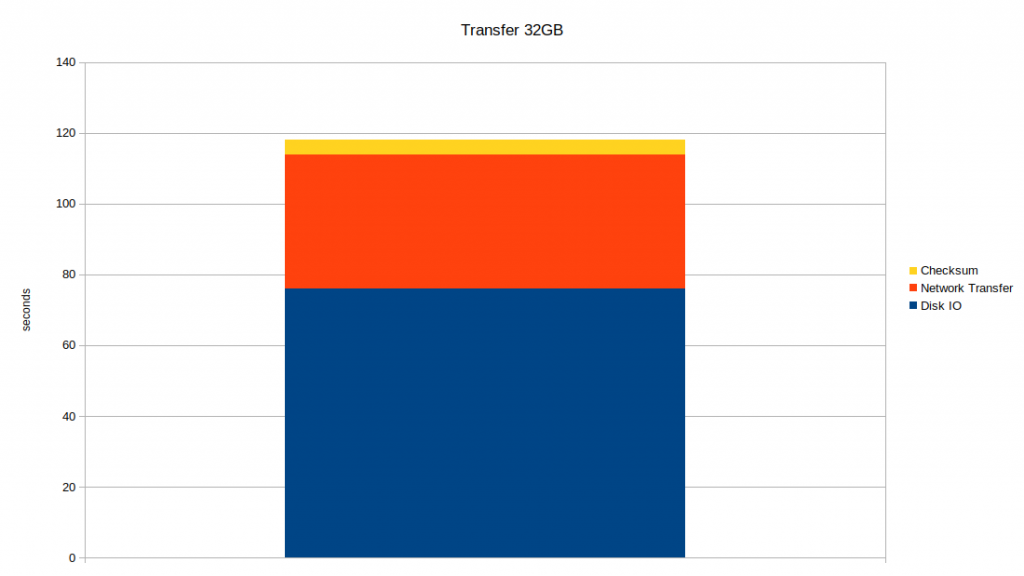

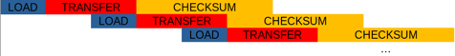

The solution is based on Hercules, which splits large files into 32 GB blocks for transmission and verification. The files can be arbitrarily large (petabytes). Hercules transfers data from source disk to destination disk at 4.7 Gbit/s sustained, and at 6.7 Gbit/s if only the network transfer is taken into account. Through parallelization across several machines, the throughput can readily scale up to handle larger data volumes.
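To give a feel for these numbers, the following back-of-the-envelope sketch estimates how many 32 GB blocks a file produces, how long one block takes at the quoted rates, and how the total time would shrink with several machines. It is not Hercules code: the function names are hypothetical, decimal gigabytes are assumed, and perfectly linear scaling across hosts is an idealization rather than a measured result.

```python
BLOCK_BYTES = 32 * 10**9  # 32 GB block size from the text (decimal GB assumed)


def block_count(file_bytes: int, block_bytes: int = BLOCK_BYTES) -> int:
    """Number of blocks needed for a file; the last block may be partial."""
    return -(-file_bytes // block_bytes)  # integer ceiling division


def seconds_per_block(rate_gbps: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Time to move one full block at a given rate in Gbit/s."""
    return block_bytes * 8 / (rate_gbps * 1e9)


def transfer_hours(file_bytes: int, rate_gbps: float, hosts: int = 1) -> float:
    """Idealized total transfer time in hours.

    Assumes each host sustains rate_gbps independently and that the
    aggregate rate scales linearly with the number of hosts.
    """
    if hosts < 1:
        raise ValueError("hosts must be at least 1")
    return file_bytes * 8 / (rate_gbps * 1e9 * hosts) / 3600


if __name__ == "__main__":
    one_petabyte = 10**15
    print("blocks in 1 PB:", block_count(one_petabyte))
    for label, rate in (("disk to disk", 4.7), ("network only", 6.7)):
        print(f"{label}: {seconds_per_block(rate):.1f} s per 32 GB block")
    for hosts in (1, 2, 4):
        hours = transfer_hours(one_petabyte, 4.7, hosts)
        print(f"1 PB with {hosts} host(s): {hours:.0f} h")
```

These are estimates derived from the rates quoted above, not measurements. Real per-block times also include loading and verification overhead.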

Image 1: Overview of the transmission pipeline

Image 2: Time used per 32 GB block